MEDIA DAY AT DARPA SUBT CHALLENGE URBAN CIRCUIT

SubT Challenge Urban Circuit Overview

A few weeks ago I had the pleasure of attending media day at the DARPA Subterranean (SubT) Challenge Urban Circuit on behalf of Open Robotics. My intent was to cover the event at a level of detail much higher than the traditional media outlets. In particular, I wanted to show the ROS community how the robots were built, the subsystems involved, the competition strategies, and what the competition was like overall.

If you are unfamiliar with the SubT Challenge, it is a competition put together by DARPA to spur innovations in robots that are able to autonomously navigate subterranean environments. The SubT Challenge is the latest incarnation of DARPA robotics challenges, with prior programs including the DARPA Robotics Challenge (humanoid robots), the DARPA Urban Challenge (autonomous city driving), and the DARPA Grand Challenge (autonomous ground vehicles). The SubT Challenge was designed to mimic the conditions found in dangerous subterranean emergencies such as collapsed mines or caves, damaged subways during a hurricane, or a nuclear power plant after a meltdown.

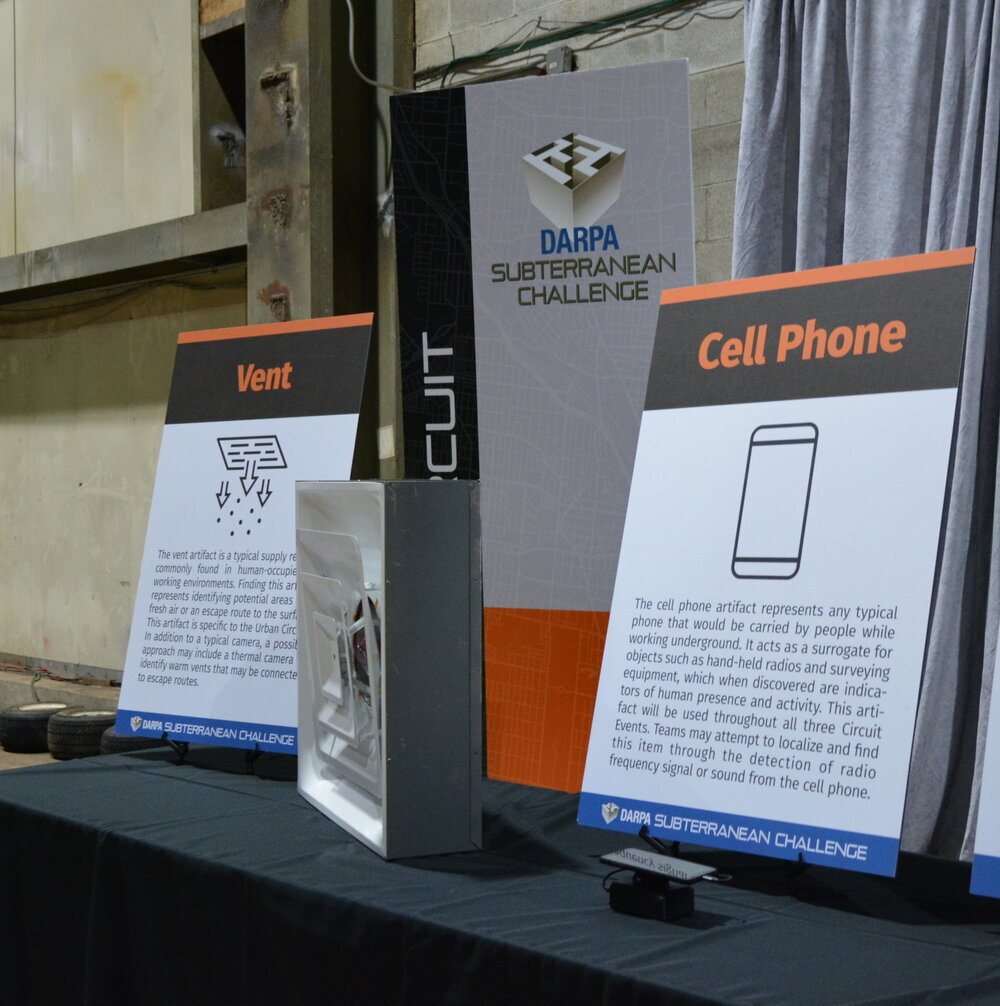

The competition itself consists of a large subterranean course filled with "artifacts" such as backpacks, vents, cell phones, gas leaks, and simulated human survivors. The artifacts are fairly realistic in that the gas leak is an actual CO2 emission, while the "survivor" and vent are both heated for added realism. During the competition each team is able to send multiple robots into the course for an hour, and points are awarded based on how many artifacts are correctly located. While this competition sounds relatively straightforward, the task is actually quite difficult for most robots. The difficulty is due to the lack of GPS reception, the dark and awkward terrain, and the difficulty in establishing a wireless connection back to the robot's operator. DARPA has released this video of the course for the previous Tunnel Circuit event that gives a pretty good overview of the complex environments they would like to explore.

In addition to the physical competition, or as DARPA calls it, the Systems Competition, there is also a Virtual Competition happening concurrently. This Virtual Competition uses ROS and Ignition Gazebo to create a high fidelity simulation analogous to that of the Systems Competition. Competitors are allowed to create a team of robots from stable pre-configured virtual robot models. Once the teams have selected their robots, they then develop the autonomy software that will drive their robot team. During the competition the robots are deployed to a novel simulation environment and scored on how many artifacts they find. DARPA put together this video of the Tunnel Circuit which gives a good overview of what the Virtual Competition looks like. It is worth noting that there are a few teams that compete in both the Virtual Competition and the Systems Competition.

The Facility

The SubT Challenge Urban Circuit was held at the Satsop Industrial Park on the Olympic Peninsula of Washington state. To be honest, Satsop isn't really an urban area, and the industrial park isn't really an industrial park. The locals call Satsop "Whoops," a nickname that comes from the Washington Public Power Supply System (WPPSS) but also describes what happened at the location. In the late 1970s and early 1980s the area was slated to be the location of a new nuclear power plant, but a public ballot initiative stopped the municipal bonds that funded the operation, and construction of the plant was eventually halted. There were never plans to demolish the site, and the facility was recently opened up to business development. The facility is massive in size and scope, and while the area is not itself "urban" the interior of the buildings is certainly reminiscent of an underground train or subway station. Since the facility is unfinished there are areas where re-bar sticks out of the ceiling, floor, and walls, and the facility looks eerily like what Civic Center Station in San Francisco might look like after "The Big One." The location on the Olympic Peninsula only adds to the creepiness factor; as I was driving through the area the scenery reminded me of old episodes of X-Files and Twin Peaks.

Late Night Practice & Team CoSTAR

I arrived at the competition late the night before, but thankfully not too late. Elma, where Satsop is located, is a very small town; according to Wikipedia it has 600 people. There is just one real hotel and a handful of restaurants; the only thing open past 7:30 is the Burger King. I had a quick burger at the local greasy spoon, and I asked if they knew anything about all the robots running around at the old nuclear facility a few miles outside town. They didn't seem to know anything but didn't seem surprised. I returned to the hotel to answer a few e-mails and turn in early. After about an hour I heard what sounded like children repeatedly running up and down the hotel hallway. After the fourth or fifth race I poked my head out of my room to see if there were any parents nearby and to my surprise a [Boston Dynamics Spot robot][0] whizzed by.

I followed the robot down the hall at a safe distance to where its tenders were congregating. When I introduced myself and asked about their robot, they said they were a sub-group of Team [CoSTAR][1], which is a collaboration between Caltech, JPL, MIT, KAIST, and Lulea University of Technology in Sweden. As with most of my conversations I asked if it was running ROS. Someone with the team told me that it was and that there was a ROS node interfacing with the Spot API. On top of Spot was a rather large sensor payload. According to Spot's minder the team had only gotten Spot recently, and they had adapted the sensor and compute payload from a previous robot to fit Spot. As far as I could see there were three depth cameras, a Velodyne LIDAR, and what I believed was a wireless repeater (also called a breadcrumb) deployment mechanism mounted on the back. The LIDAR had a gorgeous cage and a mast that appeared to be an OEM component. All of this was in addition to Spot's built-in sensor suite. According to one of the robot minders, since the Spot was so new, they still hadn't worked out the bugs, and in particular they were concerned with mapping and navigating stairs. They were using the hotel to refine their planning algorithms. This was a bold strategy that paid off during the competition the following day when [Spot successfully traversed the stairs][2]. After talking with the team for a bit, I bid them farewell and left them to practice.

The Competition

Team CoSTAR must have been practicing all night as I awoke the next morning at 5 a.m. to the pitter patter of little robot feet. After some breakfast and a few cups of coffee I made my way up to the Satsop facility. Media day began with a briefing by DARPA about the event. While we were waiting for the meeting to start the other reporters attending started talking about seeing Team CoSTAR the previous night. The DARPA briefing outlined the day and gave an overview of the event and its goals. What was notable from the presentation was the scale of the event. If you have ever been to a regional FIRST robotics competition, it was similar in size. The event included 160 team members and DARPA had over 80 staff members on-site to administer the event. DARPA has also put quite a bit of thought into crafting the event such that it really pushes the capabilities of the teams in the competition. Between the realistic artifacts and course conditions it was pretty obvious that a competitor would need to truly push their robot's capabilities to find even 80% of the artifacts. Later, while touring one of the competition courses and seeing a cell phone artifact placed up near the rafters, it became truly apparent that a successful team would need to far exceed the abilities of a human search and rescue crew. Moreover, the teams are required to report the position of the artifacts to within an envelope of 5 m, using a global map constructed by the competitors at run time. In the case of the cell phone in the rafters, this means that the robot would not only need to find the object but also localize it with a high degree of accuracy. This makes it almost impossible to win the competition without coordinating multiple robots with different sensing modalities.
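To make the 5 m reporting requirement a bit more concrete, here is a minimal Python sketch of how a report might be checked against a surveyed artifact position. The function name, the coordinates, and the assumption that the error is a plain Euclidean distance in a shared global frame are all mine for illustration; DARPA's actual scoring procedure may differ.

```python
import math

# Tolerance taken from the 5 m envelope mentioned above.
TOLERANCE_M = 5.0


def is_report_valid(reported, truth, tolerance_m=TOLERANCE_M):
    """Return True if a reported (x, y, z) position lies within
    tolerance_m of the true position, using Euclidean distance.

    Both points are assumed to be in the same global frame, in meters.
    """
    return math.dist(reported, truth) <= tolerance_m


# Made-up example: a cell phone placed up near the rafters.
phone_truth = (42.0, 17.5, 6.0)
good_report = (44.0, 16.0, 5.0)  # a few meters off in every axis
bad_report = (42.0, 17.5, 0.0)   # right spot on the floor plan, 6 m too low

print(is_report_valid(good_report, phone_truth))
print(is_report_valid(bad_report, phone_truth))
```

The second report hints at why the phone in the rafters is such a tough artifact: under these assumptions, a robot with a perfectly good 2D map but a poor height estimate would still fall outside the envelope.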

Team NUS SEDS

The first team we encountered on the media day was National University of Singapore Students for Exploration and Development of Space (NUS SEDS). As far as I could tell, the team was exclusively undergraduate and graduate students. In my brief time with the team members, they said that their focus was creating a low cost platform that was still capable of competition. DARPA literature claims the team had three types of robots but the only one on display was their omnidirectional ground vehicle. This vehicle's design was simple, similar to a stripped down Mars rover. Considering that they built the entire robot themselves, the final fit and finish was quite impressive. Most of the robot was 3D printed with the exception of the bent sheet metal chassis and the wheels mounted to hobby servos. The sensor array was limited by the price point of the robot and consisted of a RealSense depth camera, an RPLIDAR, and a second camera that I believe to be some sort of FLIR sensor. The sensor suite was fairly constrained compared to other competitors but probably sufficient for basic autonomy. Like many of the other teams, NUS SEDS had a "breadcrumb" solution for maintaining wireless connectivity within the competition track. From what I could gather these breadcrumbs were some sort of circuit board mounted in a small 3D printed enclosure. Overall these were the smallest and simplest breadcrumbs I saw, but also the most polished.

Team Coordinated Robotics

After chatting with NUS SEDS for about ten minutes, we got pulled away to watch Coordinated Robotics' run. What's interesting about Coordinated Robotics is that this was the first competition event for all but one of their team members. In the previous SubT Challenge Virtual Competition event, Team Coordinated Robotics won first place with a fairly adept autonomous robot team. If you are curious about what it takes to win the Virtual Competition with a single team member, you should check out this interview with team leader Kevin Knoedler. Since the last Systems Competition event and his win in the last Virtual Competition event, Kevin has grown his Systems Competition team to include students from California State University Channel Islands and a local middle school.

The Coordinated Robotics robot team for the Systems Competition consisted of two ground vehicles and a small number of other robots (I believe a drone and a smaller UGV, which I didn't get to see launch). According to my brief conversation with Kevin the ground vehicles are re-purposed from a security robot start-up that recently went under, which I am sure saved him a lot of time and resources. Six months is not a lot of time to design and build two unmanned ground vehicles, and Coordinated Robotics looked like they had made the best of the tight deadline and small crew. In terms of sensor suite, the robot had the standard Velodyne puck on a simple extruded aluminum mast and a forward mounted depth camera. I didn't see any additional cameras and I believe the large LED arrays are to obviate the need for a FLIR camera. I've worked with FLIR cameras in the past and they are a bit difficult to handle, and avoiding them probably minimizes complexity. In lieu of wifi breadcrumbs the Coordinated Robotics team appears to be using a 2.6 GHz antenna usually used for FPV drone racing. I am not sure if this is just for video, communication, or both. Considering the size of the team and the tight schedule I was really impressed, and a bit jealous of Kevin's ability to bootstrap a competitive team.

Team Robotika

After watching Coordinated Robotics we were ushered back to the Team Garages at the Satsop Facility to meet with Team Robotika. Robotika is a collection of Czech, Swiss, and US groups joining forces to participate in the competition. The diversity of the team is plainly apparent from the diversity of robotic platforms they had on display. In addition to being a Systems competitor, Robotika is also competing in the Virtual Competition, and is one of the few teams taking advantage of simulation to drive real world autonomy. You can read all about their Virtual Competition performance on their blog.

The crown jewels of Robotika's team are the Kloubak K2 and Kloubak K3 robots. These robots are novel because of their articulated chassis with levered axles, which allow the robots to navigate over broken terrain and larger obstacles. This became particularly important in the Urban Circuit as the course had a number of concrete "curbs" that ran through the facility. As far as I could gather these curbs functioned as a sub-floor upon which grate flooring would have been placed (like the flooring used in a server room). These curbs were approximately 6 to 8 inches high and represented significant obstacles to some of the smaller wheeled robots. You can check out the project's blog if you would like to read a bit more about the design decisions involved. It is wonderful to see a team iterate on a core idea and then bring it to the field. From what I can gather from the blog the articulated joint between the sections of the robot has a potentiometer and Hall effect sensor apparatus for determining the relative position between the articulated sections. If you click through on the project blog there are some great shots of the universal joint that attaches sections of the K3 robot. As far as I could tell the K2 robot had multiple RealSense sensors, front and rear line scan LIDARs, and a higher resolution camera. The K3 platform is similar but omits the depth camera. The K3 also appears to have two sensor housings up front but I can't determine what they are exactly. They appear to be cameras pointed towards the ground, perhaps for visual odometry. The website indicates the K2 has an onboard Jetson Nano running off of 36V hoverboard batteries.

In addition to the K2/K3 robots, Robotika had a few other robots to show off, including two smaller, less expensive platforms. The first is "MOBoS," which is a small wheeled vehicle sporting a light and two depth sensors. The second is "Maria," a small tracked vehicle with a similar sensor array. It is not clear what Robotika's strategy was for wireless coverage, but given the number of antennas on both robots I wouldn't be surprised if they functioned as wifi relays. Finally there was the highly modified Eduro robot, which had quite an impressive sensor array. The Eduro robot was originally developed as an educational robot and sports multiple LIDAR and depth sensors.

Team CSIRO Data61

The Commonwealth Scientific and Industrial Research Organization (CSIRO) Data61 team is a collaboration between CSIRO and Georgia Institute of Technology. CSIRO is the Australian analog to America's Southwest Research Institute, and from what I could gather from talking with them, the SubT Challenge is quite similar to some work they presently do for mining operations in Australia. As such this team had some of the most polished and mature looking robots at the competition. The CSIRO Data61 team had six robots in total: two BIA5 Titan tracked vehicles, two slightly smaller SuperDroid LT2 robots, and two DJI Matrice 210 drones. All of CSIRO's stock robot platforms were heavily modified with additional sensor and compute payloads. Both the Titan and the SuperDroid platforms had what appeared to be a shared sensor payload consisting of a machined aluminum block that housed four cameras along with a spinning tilted Velodyne LIDAR within a safety cage. On top of the safety cage there appeared to be an additional sensor array along with a high power LED light, but it was unclear to me what kind of sensor it may have been. The complete assembly was very polished and probably offered high-fidelity 360 degree views. Notably absent were any sort of depth cameras or downward facing cameras, which leads me to believe the spinning and tilted LIDAR covers most of the ground around the robot.

What I found particularly interesting about the CSIRO team was that the larger Titan robot had a drone launching (and presumably landing) platform, which looked like it was a big engineering project. From what I could gather the launch pad had actuators to secure the drone in transport and, given the size of the Titan, I would assume it had sufficient power to re-charge the drone. The drone itself is an off the shelf DJI drone with the addition of a gimbal-mounted camera and what I presume to be a rotating LIDAR. To make all that kit work there was also a compute and communication payload. The stock DJI drone has an operating time at max payload of 24 minutes, and simply allowing the drone to land and take off could really extend that capability over the duration of the one hour course run. Surprisingly, with all that payload, the drone can still fly. One of the big highlights of the competition was that the drone was able to successfully launch, find an artifact, locate it correctly, report the results, and return. You can see a short clip in this highlight video.
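A quick back-of-the-envelope calculation shows why a landing platform matters. The 24 minute flight time and the one hour run come from the figures above; the idea of splitting the flight into short hops while the drone rides on the Titan in between, the 4 minute hop length, and the function names are my own assumptions for illustration, not anything the team described.

```python
RUN_MINUTES = 60        # length of a course run
FLIGHT_BUDGET_MIN = 24  # stock endurance at max payload, as quoted above


def coverage_fraction(run_min, flight_budget_min):
    """Fraction of the run the drone could spend in the air on one battery."""
    return min(1.0, flight_budget_min / run_min)


def hops_available(flight_budget_min, hop_min):
    """Number of whole hops of hop_min minutes that fit in the flight budget.

    Assumes (hypothetically) that the drone draws negligible power while
    parked on the ground vehicle and is not recharged between hops.
    """
    return int(flight_budget_min // hop_min)


print(coverage_fraction(RUN_MINUTES, FLIGHT_BUDGET_MIN))  # 0.4
print(hops_available(FLIGHT_BUDGET_MIN, hop_min=4))       # 6
```

In other words, a drone that launches at the start gate is out of the game well before the halfway mark, while one that hitches a ride could save its flight time for the spots the ground vehicle can't reach. Any recharging on the platform would only improve on these numbers.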

Team NCTU

The NCTU team is a fan favorite. This wonderfully pleasant team of graduate and undergraduate students is from the National Chiao Tung University in Taiwan. I would characterize their approaches to the problems of SubT Challenge as "highly innovative". The reason SubT Challenge fans love NCTU is that they are the only team daring enough to use a blimp as their aerial platform. For the Urban Circuit the blimp had to go on a bit of a diet (more like a fishing line corset) to squeeze through the tight passageways. This DARPA video from the Tunnel Circuit in August gives a good overview of the team. It is worth giving the robots a good look in the video as they have evolved significantly since the last Circuit. If you are interested in the specifics of the blimp, the students published a paper about the Duckiefloat on arXiv. In the Urban Circuit the general blimp configuration remains the same with a Jetson Nano, a Raspberry Pi, a single RealSense depth camera, and controllers for motors and illumination. I believe that the students have also added an array of Mistral millimeter wave radar modules around the exterior of the blimp to assist in navigation (and to avoid getting stuck).

While the blimp steals the show, I was actually more impressed with the team's Husky robots. They were loaded down with every sensor and the kitchen sink, and I am pretty sure every student had a hand in building a subsystem of the robot. On the front of the robot, there were two RealSense sensors along with a small M12 wide angle lens which I believe to be either a NIR or FLIR camera. On the right and left corners of the robot, there was a sensor array that consisted of another millimeter-wave radar unit, a RealSense depth camera, and a ReSpeaker board. There was also a mast assembly with a Velodyne puck LIDAR, a third ReSpeaker, and two additional millimeter wave radar boards. The ReSpeakers were a novel sensor for the course and I believe they had two applications: locating sound emitting artifacts (like the cell phone) and locating the team's wireless breadcrumbs.

Everything about the NCTU team was unique and innovative, and that includes their communication breadcrumbs. NCTU's breadcrumbs weren't just wireless relays; they included both a camera and a speaker. The breadcrumbs emit a high frequency sound that the robots could servo to if disconnected. Given that the course was a cavernous area made of rebar steel and concrete, this makes some sense. The concrete isn't great at transmitting radio waves and the rebar probably acts as a Faraday cage on top of that. I wouldn't be surprised if under certain conditions sound travels farther than wifi (I'm sure there will be a paper sooner or later). The Husky robot had deployment slots for up to three breadcrumbs. One of the team members also showed off a spherical robot breadcrumb that the team hopes to build in the future. The idea is that a mobile breadcrumb system could re-configure itself based on changing conditions and even perform additional search operations while a main robot expands the mapping frontier.
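None of the teams told me how their robots decide when to drop a breadcrumb, so the following Python sketch is purely my own illustration. It uses the textbook log-distance path loss model to estimate signal strength and drops a relay whenever the estimate falls below a threshold. The signal strength at 1 m, the path loss exponent, the threshold, and the function names are all assumed values, not anything from NCTU; only the limit of three breadcrumbs comes from what I saw on the Husky.

```python
import math

MAX_BREADCRUMBS = 3         # the Husky had slots for up to three
RSSI_AT_1M_DBM = -40.0      # assumed signal strength 1 m from a relay
PATH_LOSS_EXPONENT = 3.5    # assumed; concrete interiors tend to be lossy
DROP_THRESHOLD_DBM = -80.0  # assumed, with margin above where a link fails


def estimated_rssi(distance_m):
    """Log-distance path loss: RSSI(d) = RSSI(1 m) - 10 * n * log10(d)."""
    d = max(distance_m, 1.0)  # clamp so the model is not used inside 1 m
    return RSSI_AT_1M_DBM - 10.0 * PATH_LOSS_EXPONENT * math.log10(d)


def plan_drops(path_length_m, step_m=1.0):
    """Walk a straight corridor and return the distances (from the start)
    at which a breadcrumb would be dropped."""
    drops = []
    last_relay_at = 0.0  # the base station sits at the start
    position = 0.0
    while position < path_length_m and len(drops) < MAX_BREADCRUMBS:
        position += step_m
        if estimated_rssi(position - last_relay_at) < DROP_THRESHOLD_DBM:
            drops.append(position)
            last_relay_at = position
    return drops


print(plan_drops(100.0))
```

With these made-up numbers a relay goes down roughly every 14 m and all three are spent less than halfway down a 100 m corridor, which gives some feel for why a mobile, reconfigurable breadcrumb is an appealing idea.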

Team Explorer

Towards the end of the day we had some time to check out a few of the other Team Garages. Unfortunately, I didn't get a chance to talk with Team Explorer but I did get a chance to stroll by their garage. Team Explorer is a collaboration between Carnegie Mellon University and Oregon State University and features the universities' own custom UAV and UGV. The Explorer UGV is massive, and if I had to guess it is probably one of the largest, if not the largest robot in the field. The UGV served as a platform for the team's UAV which you can see in action in the competition videos. From what I could see I believe the UAVs are simply passengers on the UGVs as I couldn't make out a real charging or docking mechanism. I didn't get a great shot of the front of the UGVs or the sensor suite, but from what I can gather they have a custom machined square aluminum sensor array much like Team CSIRO Data61. Inside the sensor array are at least four of what I believe to be RealSense depth cameras along with some high power LEDs. On top of the sensor array is a Velodyne puck and a fifth depth sensor pointed towards the ceiling for good measure. If you look at the image above you can also make out the fans; with so many sensors I am going to guess that aluminum box gets mighty hot.

Similar to their UGVs, Explorer's UAVs are massive, with a similarly large sensor payload. The UAVs are custom quadcopters that break with tradition and have ground facing rotors. The airframe appears to be made out of carbon fiber with an aluminum crash cage around the periphery and a custom carbon fiber shell. Unfortunately, I wasn't able to peek inside the case and see what the compute and power distribution infrastructure looked like. I would assume most of the interior consists of batteries as most of the drones had massive sensor arrays. I got a close up of one of the sensor arrays you can see below. It had three RealSense depth cameras angled up, down and forward, plus a Velodyne puck for good measure.

Team MARBLE

Last, but certainly not least among the SubT Challenge teams that I got to visit with was Team MARBLE. MARBLE is a witty, DoD ready acronym for Multi-agent Autonomy with Radar-Based Localization and Exploration. The team is a DARPA-funded collaboration between University of Colorado Boulder, University of Colorado Denver, and Scientific Systems Company Inc in Boston. Team MARBLE focuses on ground vehicle autonomy and perception, and as such they use highly modified stock UGVs as their mobility platform. According to DARPA the team has three Clearpath Husky robots, one SuperDroid LT2-F, three SuperDroid HD2s, and two Lumenier QAV500 quadcopters. Unfortunately I was only able to get up close to the Husky and LT2-F variants. Both the Husky and the LT2-F come equipped with a team developed sensor mast. The mast has three Occipital Structure Sensors: one forward facing, one ground facing, and one connected on a custom pan-tilt rig. Also on the pan-tilt rig is what I believe to be some sort of FLIR camera but I couldn't determine the model. Above all of these sensors is a super high power LED light. Sandwiched in between the two sensor arrays is an Ouster OS0 LIDAR with a 90 degree field of view. I really like this approach of actuating the sensor array; while it adds complexity to the build, it does lessen the number of sensors needed and can obviate the need for the robot to move to map an area. Since MARBLE's name includes "radar" I am going to assume that below the sensor mast is where the radar array is mounted. I was unable to figure out what brand of radar sensor it was, though it kind of looks like a SlamTec ToF LIDAR. If you happen to know what the sensors are, leave a comment below.

Team MARBLE also had the most refined communication breadcrumb at the competition. Both the Husky and LT2-F robots appeared to have rear-mounted slots for up to three breadcrumb repeaters. The breadcrumbs themselves were quite interesting as they had actuators that lifted the antennas a good two feet up into the air. From my discussions with the team, the antennas really did improve the range of the breadcrumbs. The "linear actuator" for each breadcrumb is actually from a Mazda Miata's radio antenna. That's right, it is an antenna that deploys antennas.

Due to time constraints and the competition schedule, I didn't get a chance to meet with the last two teams described here but was able to find a bit of information about both teams.

Team CERBERUS

CERBERUS is a creative acronym for: "CollaborativE walking and flying RoBots for autonomous ExploRation in Underground Settings." The team is a collaboration between some heavy hitters in robotics including University of Nevada Reno, ETH Zurich, Sierra Nevada Corporation, University of California Berkeley, Flyability SA, and the Oxford Robotics Institute. The robots on this team are just as creative as the team name and the organizations behind the team. DARPA lists the team as consisting of two ANYbotics ANYmal-B robots, an Inspector Bots SuperMegaBot, a SuperDroid IG52-DB4 4WD, three DJI Matrice 100s, and two Flyability Gagarin robots. From looking at a few of the team's videos, it appears that their wireless communication approach is to use their wheeled platforms (SuperDroid, SuperMegaBot) as mobile repeaters that can also search for artifacts. According to a concept of operations video released by the team, they intend to use an ultra-wideband mesh networking approach to wireless connectivity. The same video also indicates that the robots will include LIDAR, RADAR, FLIR, NIR, and RGB cameras, as well as inertial measurement units for SLAM.

I dug around and found a few videos DARPA released of team CERBERUS, including some really impressive perception videos, along with the team's YouTube channel.

Team CTU-CRAS-NORLAB

CTU-CRAS-NORLAB is a collaboration between the Center for Robotics and Autonomous Systems at Czech Technical University in Prague and the Northern Robotics Laboratory at Laval University, Canada. According to DARPA literature, their robot team consists of a Clearpath Robotics Husky, three BlueBotics ABSOLEM robots, two DJI Flamewheel F450s, and three Trossen Robotics PhantomX AX Hexapod Mark II. I believe for both the Urban and Tunnel Circuits the team mainly used the aerial and ground vehicles, with the hexapods being held in reserve for the Cave Circuit in August. I was unable to tell precisely what the team's communication strategy was, but considering their performance it must be working for them. In the absence of any close up shots of the team, I was able to pull together links to a number of the team's videos that are worth watching.

Center for Robotics and Autonomous Systems website with a lot of videos of the robots in action.

Tunnel Circuit video with good close up shots of the robots.

Wrapping it Up

The leaderboard above gives the final scores for all the teams in the SubT Challenge Urban Circuit (Systems Competition). I think what's notable is that the spread of scores really isn't that wide, and there are still quite a few points on the table. While there is a spread between the DARPA-funded teams and the self-funded teams, it isn't an insurmountable lead. Moreover, this is the first competition event for much of the Coordinated Robotics team and they were able to make it to the middle of the field with a very small team and only prototype robots. From what I saw, a team with strong autonomy skills could very likely dominate or place highly with a well coordinated set of robots. My conjecture is that some of the teams competing in both Systems and Virtual Competitions might have an advantage in the long run as they have a tighter build/run/debug loop to both work out the bugs in their systems and test their strategies.

The other thing I find really interesting is the ability of the Coordinated Robotics team to be a contender in both domains. The leaderboard above shows the scores of the Virtual Competition, and Coordinated Robotics also managed to place second despite spending time on hardware integration for the Systems Competition. The Virtual Competition environment did a good job of mirroring the conditions in the Systems Competition course, and I think that experience, even if it is virtual, helped drive team design decisions for the Systems Competition robots. Similarly, Team Robotika competes in both the Systems and Virtual Competitions, and we've heard rumors that virtual analogs to their physical platforms might just make it into the Virtual Competition. The Cave Circuit will present a number of mobility challenges and innovation in platform designs might just be necessary.

It is worth mentioning that there is still time to register to compete in the Cave Circuit in August 2020 and the Final Event in 2021, for both Systems and Virtual Competitions. I know many people are stuck at home right now with extra free time, and playing with the SubT Simulator is a great way to brush up on your ROS and Ignition Gazebo skills even if you have some hesitation. The best way to get started is to check out the SubT Virtual Testbed on Bitbucket. There is also a pre-configured Docker container - I was able to fire the container up and have a robot running around in a matter of an hour. It is worth noting that registering for the competition just keeps you up-to-date on DARPA announcements, and you don't need to qualify until a couple months before the event. If you are interested in competition details including prize amounts, they can be found on the DARPA SubT Challenge website. Open Robotics is also in the process of creating a set of comprehensive tutorials to get you started with the SubT Virtual Testbed - you can find the first tutorial here. In the next few weeks, we will add additional materials to help you learn how to use the software to build your own virtual robot. Our hope is that the guides not only serve the SubT community but also allow those interested in robotics a fast and easy way to get started with ROS and Ignition Gazebo.

I hope to attend media day at the Cave Circuit in August to report on how the robots have evolved. Hopefully next time I will be able to sit down with a few of the teams and talk to them about their software stack and their strategies for winning. I would also like to hear from the ROS community about what they're interested in knowing about the SubT Challenge and the competitors. This time I chose to focus on the hardware, but in the future I would like to learn more about the software architecture behind the teams and the multi-robot systems. This is the rare chance where we have a lot of roboticists in one location not just talking about robots but actually working with them to solve a problem.

Please let me know what you would like to learn more about in the comment section.

(Approved for Public Release, Distribution Unlimited)